Ex Machina: Not Exactly Deus

In the grand theaters of ancient Greece, when mortal conflicts spiraled into chaos, divine intervention would descend, literally. The phrase "ἀπὸ μηχανῆς θεός," or "deus ex machina" in Latin ("god from the machine"), originates in this dramatic convention, which is attributed to the playwright Aeschylus. Actors portraying gods were lowered onto the stage by mechanical contraptions, and their arrival served as an outside-the-box solution that tied up loose ends and brought the drama to a firm conclusion.

Researchers from Carnegie Mellon and Penn State set out to explore whether large language models (LLMs) could think like humans when it comes to design. They wanted to see if these AI systems could mimic the way we process ideas and solve problems. Did they succeed in bringing the "ghost" into the machine? Let’s dive in and find out!

Executive Summary: Putting the Ghost in the Machine

Using Kirton’s Adaption-Innovation (A-I) Theory as a guide, researchers tested whether large language models (LLMs) like GPT-3.5 could mimic human cognitive styles to solve design problems. The results are in, and they tell a fascinating story of potential, creativity, and opportunity.

Key Insights:

Two Minds, One Machine:

When prompted to "think adaptively," the LLM generated practical, structured solutions built on existing norms. These were highly feasible and designed to work within the rules—a hallmark of the adaptive mindset.

On the flip side, when asked to "be innovative," the model delivered bold, paradigm-breaking ideas. While exciting and transformative, these solutions often veered into the impractical.

How It Was Measured:

The researchers used two metrics to see how well the LLM played its roles:

Feasibility: Adaptive solutions shone here, staying grounded and realistic.

Paradigm Relatedness: This metric captures how far a solution departs from the established way of solving the problem. The innovative prompts encouraged more adventurous, boundary-pushing ideas, but with mixed success regarding practicality.

Room to Grow: While creative, the LLM’s innovative ideas sometimes felt tethered to its internet-based training—unable to break entirely free into the revolutionary. And because the study focused on specific design tasks, there’s more to explore about its versatility in broader challenges.

What This Means for Design and AI:

This experiment is a glimpse into a future where human and machine minds collaborate seamlessly. Imagine design teams augmented by AI partners: adaptive thinkers creating stability and incremental progress, while innovators conjure up the unimaginable. Together, they could tackle complex challenges faster and more effectively than ever.

Where Do We Go From Here?

The journey doesn’t end here. To truly unlock the potential of LLMs in design:

Broader problem sets must be tested.

Advanced prompting techniques, like showing examples (few-shot) or step-by-step reasoning (chain-of-thought), can guide LLMs to more nuanced results.

Fine-tuning LLMs to better understand and emulate human creativity could lead to a new era of innovation.
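The two prompting techniques named above can be sketched as plain prompt builders. This is a minimal illustration, not the setup used in the study: the function names, the prompt wording, and the worked examples passed in are all hypothetical.

```python
def few_shot_prompt(task: str, examples: list[tuple[str, str]]) -> str:
    """Few-shot: show worked (problem, solution) pairs before the new task."""
    blocks = [f"Problem: {problem}\nSolution: {solution}"
              for problem, solution in examples]
    # The final block leaves the solution open for the model to complete.
    blocks.append(f"Problem: {task}\nSolution:")
    return "\n\n".join(blocks)


def chain_of_thought_prompt(task: str) -> str:
    """Chain-of-thought: ask for step-by-step reasoning before the answer."""
    return (
        f"Problem: {task}\n"
        "Think through the problem step by step, "
        "then state the final solution."
    )


# Hypothetical worked examples, written only for this illustration.
EXAMPLES = [
    ("Keep a bag safe in a cafe", "A strap that locks to the chair leg"),
    ("Open a jar with one hand", "A non-slip base that grips the jar"),
]

prompt = few_shot_prompt("Design a portable exercise device", EXAMPLES)
```

The resulting string would be sent to the model as an ordinary prompt; which examples to include is a design choice that would need testing.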

This research shows that the "ghost in the machine" is more than just a metaphor—it’s a bold step toward blending human intuition and AI’s raw power. The future of design might not just include AI; it might depend on it.

Background: Bridging Minds and Machines in Design

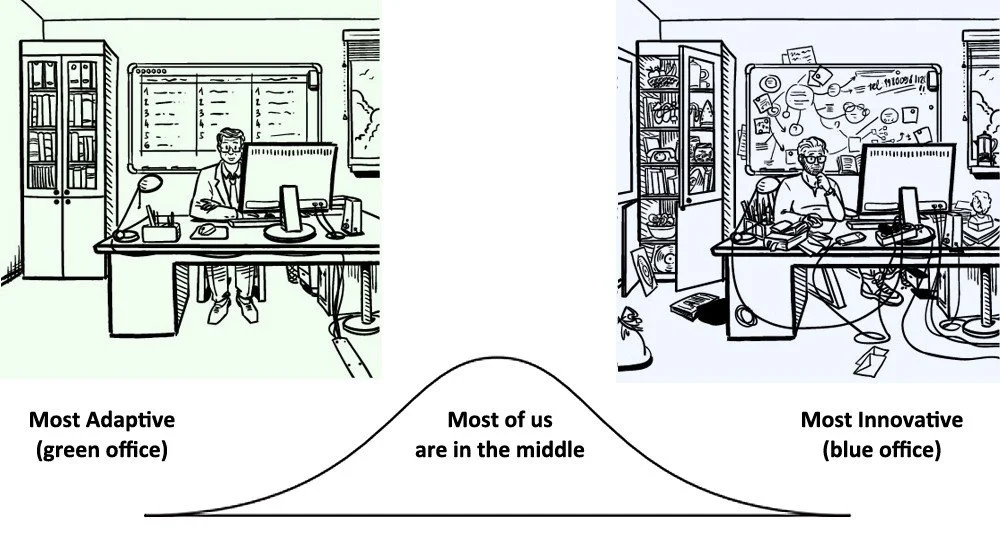

Design is as much about creativity as it is about structure, and understanding how people approach problems is key to effective teamwork. Kirton’s Adaption-Innovation (A-I) Theory offers a powerful lens for this, presenting a continuum of cognitive styles. On one end are the adaptive thinkers—structured problem-solvers who work within established frameworks to refine and enhance. On the other are the innovative thinkers, who thrive on breaking norms and crafting entirely new paradigms. Neither is better; both are essential, complementing each other to drive progress.

This diversity of thought is vital in engineering and design, where challenges demand flexibility, collaboration, and a balance of perspectives. A well-balanced team of adaptors and innovators can harness stability and creativity, paving the way for solutions that are both practical and groundbreaking.

Enter Large Language Models (LLMs)—transformative tools capable of generating human-like text and ideas. These AI systems, like GPT-3.5, leverage their ability to learn in context, adapting their outputs based on prompts. Their potential in design is immense, from generating specifications to brainstorming innovative ideas. But a critical question remains: can LLMs truly reflect human cognitive diversity, adapting their "thinking" style to emulate adaptors and innovators?

Can LLMs replicate the nuanced problem-solving approaches that humans naturally employ? If they can, combining human creativity with machine intelligence could redefine what is possible in design and foster closer collaboration between mind and machine.

Methods: Testing the Machine’s Cognitive Flexibility

To explore whether LLMs can mimic human cognitive styles, researchers tested GPT-3.5 using a straightforward yet insightful setup. The model was tasked with solving three design problems, each presented through two lenses: one adaptive, emphasizing practicality and structure, and the other innovative, encouraging bold, paradigm-shifting ideas.

Key Elements of the Approach:

Prompt Design: Adaptive prompts asked the model to refine and build upon existing ideas, while innovative prompts nudged it toward unconventional, transformative solutions. A contextual primer on Kirton’s A-I Theory was added to guide the model in aligning its outputs with the intended cognitive styles.

Evaluation Metrics: Solutions were judged on two criteria: feasibility and paradigm relatedness. By keeping the prompts and evaluation consistent, the team ensured a fair comparison of the model’s performance in adapting to these distinct cognitive styles, providing insights into its potential for human-like problem-solving.
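The prompt design described above (a shared primer, a style instruction, and a design problem) can be sketched as follows. This is a rough illustration under stated assumptions: the primer text, the style instructions, and the neutral problem statements are paraphrases written for this example, not the wording used by the researchers, and all names in the code are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical primer; the study's actual primer text is not reproduced here.
AI_THEORY_PRIMER = (
    "Kirton's Adaption-Innovation theory places problem solvers on a "
    "continuum. More adaptive thinkers refine ideas within established "
    "frameworks; more innovative thinkers break norms and propose new "
    "paradigms."
)

# Hypothetical style instructions, paraphrasing the article's description.
STYLE_INSTRUCTIONS = {
    "adaptive": "Refine and build upon existing ideas.",
    "innovative": "Propose unconventional, transformative solutions.",
}

# Neutral restatements of the three design problems (assumed wording).
PROBLEMS = [
    "Secure people's belongings quickly and discreetly in public spaces.",
    "Open lidded food containers using only one hand.",
    "Create a lightweight, portable exercise device for travelers.",
]


@dataclass(frozen=True)
class DesignPrompt:
    problem: str
    style: str
    text: str


def build_prompt(problem: str, style: str, n_solutions: int = 10) -> DesignPrompt:
    """Combine primer, style instruction, and problem into one prompt."""
    if style not in STYLE_INSTRUCTIONS:
        raise ValueError(f"unknown style: {style!r}")
    text = "\n\n".join([
        AI_THEORY_PRIMER,
        STYLE_INSTRUCTIONS[style],
        f"Design problem: {problem}",
        f"Generate {n_solutions} solutions.",
    ])
    return DesignPrompt(problem=problem, style=style, text=text)


def build_conditions(problems: list[str]) -> list[DesignPrompt]:
    """Cross every problem with every style (3 problems x 2 styles = 6)."""
    return [build_prompt(p, s) for p in problems for s in STYLE_INSTRUCTIONS]


conditions = build_conditions(PROBLEMS)
```

Keeping the primer and problem text identical across the two styles means only the style instruction changes between conditions, which is what makes the comparison fair.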

Three Design Problems and Design Prompts

Securing Public Belongings: Imagine bold new ways to quickly and discreetly safeguard people’s belongings in public spaces without disrupting the environment. Generate 10 creative solutions that redefine security.

One-Handed Food Containers: Revolutionize the way individuals with limited use of one upper extremity interact with lidded containers. Design 10 unique approaches to open them effortlessly with a single hand.

Travel-Friendly Fitness Gear: Think outside the box to create a lightweight, portable exercise device perfect for travelers. Present 10 groundbreaking ideas that combine convenience and functionality in unexpected ways.

Results: Unlocking the Mind of the Machine

The experiment revealed that GPT-3.5 can channel distinct cognitive styles, producing solutions that echo human adaptability and innovation. Here’s what the results unveiled:

1. Adaptive Prompts: Practical and Feasible Solutions

When tasked with thinking adaptively, the LLM excelled in creating solutions that were structured, realistic, and aligned with existing norms. Across all three design challenges, these outputs scored high on feasibility, showcasing the model’s ability to generate ideas grounded in practicality.

2. Innovative Prompts: Bold but Complex Ideas

On the innovative side, the LLM ventured into paradigm-shifting territory, crafting imaginative and transformative ideas. However, these often struggled with feasibility, highlighting a gap between creativity and real-world applicability. The outputs ranged from paradigm-modifying to strongly paradigm-modifying but occasionally reverted to safer, familiar territory.

3. Key Patterns Observed:

Feasibility: Adaptive prompts consistently outperformed, with ideas scoring high in practicality and usability.

Paradigm Relatedness: Innovative prompts encouraged boundary-pushing ideas, yet variability in creativity revealed room for improvement in generating consistently revolutionary solutions.
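A comparison like the one above comes down to grouping rated solutions by prompt style and averaging each metric. The sketch below shows that aggregation only. The rating scale, the field names, and the numbers are placeholders invented for illustration; they are not data from the study.

```python
from collections import defaultdict
from statistics import mean


def mean_by_style(ratings: list[dict], metric: str) -> dict[str, float]:
    """Average one metric separately for each prompt style."""
    grouped = defaultdict(list)
    for rating in ratings:
        grouped[rating["style"]].append(rating[metric])
    return {style: mean(values) for style, values in grouped.items()}


# Placeholder ratings on an assumed 1-5 scale; NOT results from the paper.
toy_ratings = [
    {"style": "adaptive", "feasibility": 4, "paradigm_relatedness": 1},
    {"style": "adaptive", "feasibility": 5, "paradigm_relatedness": 2},
    {"style": "innovative", "feasibility": 2, "paradigm_relatedness": 4},
    {"style": "innovative", "feasibility": 3, "paradigm_relatedness": 5},
]

feasibility = mean_by_style(toy_ratings, "feasibility")
relatedness = mean_by_style(toy_ratings, "paradigm_relatedness")
```

With real ratings, a spread measure alongside the mean would help show the variability in the innovative outputs that the article describes.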

The Takeaway:

GPT-3.5 demonstrated an ability to emulate both cognitive styles but leaned heavily on its adaptive strength. While it showed flashes of innovation, its capacity to produce truly groundbreaking ideas remains limited by its training. The findings highlight both the promise and the current limitations of using LLMs for mimicking human problem-solving in design.

Conclusion: The Promise and Potential of AI in Design

This exploration shows that large language models like GPT-3.5 are more than just text generators: they can emulate human-like cognitive styles, aligning with both adaptive and innovative approaches. Adaptive prompts brought out the model’s strength in generating practical, feasible solutions, while innovative prompts pushed it toward creative, paradigm-shifting ideas, albeit with some limitations.

The findings reveal that while LLMs can bridge the gap between structure and creativity, they still lean heavily on their training data, occasionally limiting their ability to produce truly revolutionary solutions. This blend of strengths and challenges points to an exciting future where AI augments human design teams, offering tailored solutions that balance practicality with bold innovation.

Looking ahead, refining these models with advanced prompting techniques and broader training could unlock even greater potential. By combining human ingenuity with the precision of AI, we are poised to reshape the landscape of design, making the impossible more achievable than ever before.

Intended Future's Reflection

We have long advocated for "clean," contextual data gathering, and its importance is now becoming apparent not only to scientists but also to industry. LLMs are marvels but not magic. We humans must still excel in the Grand Design of our future.

Disclaimer: This Future Insight is an adaptation of the original research paper "Putting the Ghost in the Machine: Emulating Cognitive Style in Large Language Models," written by Vasvi Agarwal, Kathryn Jablokow, and Christopher McComb and originally published by ASME in the Journal of Computing and Information Science in Engineering.

About this paper: Agarwal, V., Jablokow, K., and McComb, C. (November 12, 2024). "Putting the Ghost in the Machine: Emulating Cognitive Style in Large Language Models." ASME. J. Comput. Inf. Sci. Eng. February 2025; 25(2): 021002.