The Future of AI: Beyond Generative Models

Many of us have wondered what to expect from AI in the future. While some people treat generative AI as a "magic box" full of surprises that will eventually usher in the era of AGI, scientists are exploring alternatives. One of the most exciting is active inference.

Executive Summary

Generative AI, like ChatGPT and DALL-E, dazzles with its ability to produce human-like text and stunning images. But how deep does this "intelligence" go? Unlike humans, who learn through active engagement with the world, current AI systems learn passively, absorbing vast amounts of data without truly interacting with their environment. This passive learning limits AI's ability to understand and adapt in the way living organisms do. To move towards true artificial intelligence, future AI must learn like we do—through dynamic, sensorimotor experiences that connect actions to their consequences. This shift could unlock a more genuine form of understanding and pave the way for AI that not only mimics human abilities but also shares our depth of comprehension.

Key Takeaways

Generative AI's Impressive Capabilities: AI systems like ChatGPT and DALL-E can generate text and images from simple prompts, showcasing remarkable abilities in mimicking human-like outputs.

Passive Learning vs. Active Engagement: Current AI learns passively by processing large datasets. In contrast, living organisms learn through active, purposeful interactions with their environment, grounding their understanding in real-world experiences.

Limits of Passive AI: Due to their passive learning approach, generative AI systems struggle with genuine understanding and adapting to new, unforeseen situations, unlike humans who continuously learn and adjust through active engagement.

Potential for Future AI: Future advancements in AI could involve integrating sensorimotor experiences, allowing AI to learn through direct interaction with the world, similar to how humans and other organisms do.

Towards Authentic Intelligence: By adopting an interaction-based learning process, AI could develop a more authentic form of intelligence, enhancing its ability to understand, adapt, and perform tasks with human-like comprehension and flexibility.

Implications for AI Development: The shift towards active inference and embodied learning in AI could lead to more robust, adaptable, and genuinely intelligent systems, bridging the gap between current AI capabilities and true artificial understanding.

How the human brain and generative AI differ in their principles of operation

The primary function of the brain in biological systems is not merely to accumulate knowledge but to control interactions with the environment to maintain characteristic states essential for survival. This involves physiological processes like homeostasis and behaviors that extend control through feedback from the environment, known as allostasis. Brains achieve this by predicting and responding to specific interactions that change states in reliable ways, such as reducing hunger by eating or avoiding danger by fleeing.

This predictive capability is central to active inference, where the brain continuously updates its internal models to anticipate and respond to the sensory consequences of its actions.

This form of understanding, grounded in sensorimotor experiences, is crucial for adaptive behavior and precedes explicit knowledge of the world.
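As a rough illustration of belief updating driven by prediction error, the sketch below nudges a single scalar belief toward what is observed. It is a heavily simplified stand-in for the idea, not the brain's actual mechanism or the full active inference scheme: the function name `update_belief`, the `precision` weighting, and the constant observation stream are all assumptions made for this example.

```python
def update_belief(belief, observation, precision=0.2):
    """Move a belief toward an observation in proportion to the prediction error.

    `precision` (hypothetical parameter) controls how strongly the error is trusted.
    """
    prediction_error = observation - belief  # what was sensed minus what was predicted
    return belief + precision * prediction_error, prediction_error


belief = 0.0   # initial guess about a hidden quantity
errors = []    # size of the prediction error at each step
for observation in [1.0] * 20:  # assumed: the world keeps reporting the same value
    belief, error = update_belief(belief, observation)
    errors.append(abs(error))

print(f"final belief: {belief:.3f}, last error: {errors[-1]:.3f}")
```

The prediction error shrinks as the belief converges on the observed value. A fuller treatment would also let the agent act on the world so that observations move toward its predictions, which is the part that distinguishes active inference from passive prediction.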

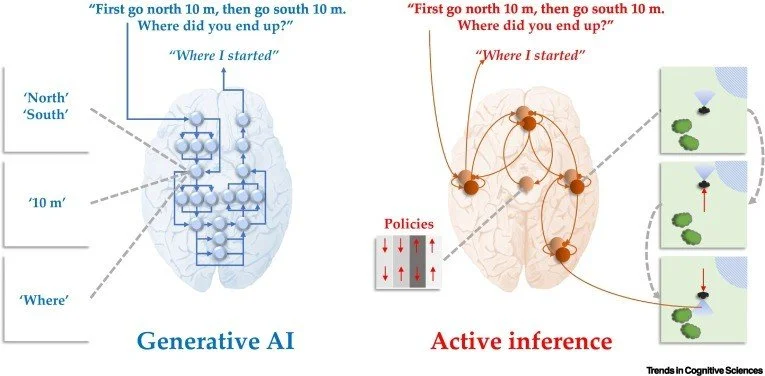

Figure 1. Generative models in generative artificial intelligence (AI) and active inference.

This figure contrasts the ways generative models predict travel destinations using transformer networks in AI and active inference in biological systems. Transformer networks, depicted on the left, use a self-attention structure to emphasize important sequence elements, predicting outputs based on salient information. In contrast, the right side illustrates active inference in the brain, where neuronal systems with reciprocal connections and hierarchical structures compute prediction errors to update beliefs and guide actions. Biological systems use these predictions to influence hidden states and navigate physical space, dynamically updating based on actions and sensory inputs.
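To make the self-attention idea on the transformer side more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It assumes identity projections (queries, keys, and values are the token vectors themselves), whereas a real transformer learns separate projection matrices. The toy vectors are invented for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (a simplification).

    tokens: list of equal-length vectors (lists of floats).
    Returns the attended outputs and the attention weights per token.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in tokens]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all token vectors.
        outputs.append([sum(w[j] * tokens[j][d] for j in range(len(tokens))) for d in range(dim)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # hypothetical 2-dimensional embeddings
outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[0]])
```

The first token places more weight on the similar second token than on the dissimilar third one, which is the sense in which attention emphasizes salient sequence elements.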

Active inference contrasts with the way generative AI systems function. While both systems rely on generative models, the role of these models in living organisms extends beyond prediction to support agency, decision-making, and goal-directed behavior. Biological systems use these models to simulate various scenarios, optimize actions, and plan for future outcomes, all rooted in sensorimotor interactions. In essence, the generative models in active inference allow organisms to learn from and adapt to their environment dynamically, grounding their understanding in real-world experiences. This embodied approach to learning and interaction is what enables living organisms to develop a core understanding of their world, a feature that current generative AI systems, which learn passively from large datasets, lack.

The debate around large language models (LLMs) and other generative AI systems

Generative AI systems, including large language models (LLMs), have sparked considerable debate regarding their understanding and capabilities. Critics argue that these models lack true comprehension and merely manipulate symbols based on statistical patterns in the data they were trained on, without any real grasp of the underlying meaning. This view is supported by examples of LLMs struggling with tasks that require causal reasoning or multi-step logical thinking, as well as instances where they generate incorrect or nonsensical outputs, known as "hallucinations." These limitations highlight the models' disconnect from the real-world experiences and interactions that ground human understanding.

On the other hand, proponents of LLMs suggest that these models exhibit surprising emergent properties and a form of general intelligence. They point to the ability of LLMs to generate coherent, meaningful responses to a wide range of prompts and solve complex problems, suggesting that linguistic training alone might be sufficient for acquiring some level of understanding. This perspective is bolstered by evidence that LLMs can encode conceptual information in ways similar to vision-based models and that they can develop implicit models of the world through extensive training on textual data. The ongoing debate reflects the complexity of defining and measuring "understanding" in AI systems and underscores the need for further research to explore the capabilities and limitations of generative AI.

Is there hope for generative models?

Generative models in both AI and biological systems work by distilling hidden variables from data to create explanations and predictions, facilitating concept formation. In biological systems, this process, known as active inference, involves grounding understanding in sensorimotor experiences. Living organisms learn through interactions with their environment, linking actions to their consequences, which enables them to abstract key features from data. For example, recognizing a table's various uses—such as placing items, sitting, or taking shelter—comes from direct, meaningful interactions with the object, leading to a deep, embodied understanding of concepts.

Figure 2 shows how generative artificial intelligence (AI) and biological systems might learn generative models to solve the wayfinding task in Figure 1.

Imagine two different ways of learning. First, picture a student named AI. AI sits in a room filled with books and screens, reading and absorbing information all day. AI learns by processing huge amounts of text, adjusting its understanding based on patterns it notices. Sometimes, AI gets a bit of extra help from a teacher who points out what's important. But AI never leaves the room or interacts with the world directly; it just learns from the information given to it.

Now, meet another student named Active. Active learns by exploring the world around them. When Active wants to understand something, like how to get to a specific place, they don’t just read about it—they go out and try different paths. If Active wants to know what happens when they walk north or south, they walk those directions and see where they end up. This hands-on learning helps Active make better predictions and understand the consequences of their actions. Active’s brain is always working, connecting experiences and refining knowledge through real-life interactions.

While AI’s method is about processing and memorizing data, Active’s approach is about exploring, experiencing, and adapting. This story of two learners shows how different methods can shape the understanding and abilities of each, with Active’s real-world interactions providing a richer, more grounded sense of knowledge compared to AI’s book-based learning.
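The way Active learns can be sketched as an agent that tries actions and records where they lead. The corridor environment, the `step`, `explore`, and `predict` functions, and all parameter values below are hypothetical and chosen only to illustrate learning the consequences of one's own actions. They are not taken from the original paper.

```python
import random
from collections import defaultdict

ACTIONS = {"north": +1, "south": -1}


def step(position, action, size=5):
    """Hypothetical environment: a corridor of `size` cells where walls block movement."""
    return min(max(position + ACTIONS[action], 0), size - 1)


def explore(n_steps=500, size=5, seed=0):
    """Learn where each action leads by trying it and counting what happens."""
    rng = random.Random(seed)
    # (position, action) -> next position -> number of times observed
    counts = defaultdict(lambda: defaultdict(int))
    position = 0
    for _ in range(n_steps):
        action = rng.choice(list(ACTIONS))
        next_position = step(position, action, size)
        counts[(position, action)][next_position] += 1
        position = next_position
    return counts


def predict(counts, position, action):
    """Most frequently observed consequence of an action, or None if it was never tried."""
    outcomes = counts.get((position, action))
    if not outcomes:
        return None
    return max(outcomes, key=outcomes.get)


model = explore()
print(predict(model, 0, "north"), predict(model, 0, "south"))
```

The agent can only predict what it has tried: asking about a position it never visited returns `None`, whereas a learner trained on a fixed dataset has no way to go and fill that gap for itself.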

Generative AI systems, by contrast, learn from large datasets without direct interaction with the physical world. They rely on statistical regularities in the data to form latent variables that reflect patterns in their input. While such models can encode deep regularities and generate coherent outputs, they lack the experiential grounding that characterizes biological learning. On this view, AI's understanding remains superficial because it never tests and refines its models through direct, purposeful action, and this passive approach limits its ability to develop a genuine understanding of the concepts it processes.

Here's an example. Humans and other animals interact with the world by exploiting specific properties of objects. Take a table: you can place things on it, sit on it, or shelter under it during an earthquake. These uses differ, but they all relate to the same object, so the concept "table" brings all of them to mind. This matches studies showing that living beings learn about objects by using them and experiencing their properties, such as weight, size, and how easy they are to throw. We grasp such concepts through direct experience of objects and of how we can interact with them.

For AI to achieve a more authentic understanding, it would need to incorporate active, sensorimotor-based learning similar to that of living organisms. This means developing AI systems that not only process data but also interact with their environment in a meaningful way, learning from the consequences of their actions. By mimicking the way biological systems ground their knowledge through active inference, AI could potentially move beyond superficial pattern recognition to a deeper, more flexible understanding of the world. This approach would involve integrating physical experiences and interactions, allowing AI to form robust, grounded concepts akin to human understanding.

Active Inference - Minimizing Free Energy

In recent years, a key idea in theoretical neurobiology, machine learning, and AI has been to maximize the evidence, or marginal likelihood, for generative models that explain how observations are caused. This approach, known as evidence-maximization, helps in understanding and making decisions in self-organizing systems, from individual cells to entire cultures. The goal is to find an accurate explanation for observations with the least complexity, a principle captured by the term ‘variational free energy.’

Variational free energy is a trade-off between complexity and accuracy: it equals complexity minus accuracy, so minimizing it favors models that are accurate without being needlessly complex. Accuracy measures how well a model fits the data, while complexity measures the difference between what we believed before and after seeing the outcomes. In simpler terms, complexity scores the informational cost of changing one's mind. The aim is to create models that explain data in the simplest way possible, in line with Occam's razor, which holds that the simplest adequate explanation is usually the best. In the context of AI, this means optimizing models to explain data efficiently, for example with fewer parameters.
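The complexity and accuracy terms can be made concrete with a small discrete example. The sketch below assumes a two-state world and a single observation, with made-up numbers. It computes free energy as complexity (the KL divergence between posterior and prior beliefs) minus accuracy (the expected log-likelihood of the observation), and checks the standard result that free energy is lowest, and equal to the negative log evidence, when the beliefs are the exact Bayesian posterior.

```python
import math


def kl_divergence(q, p):
    """KL[q || p] for two discrete distributions given as lists."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


def variational_free_energy(q, prior, likelihood_of_obs):
    """Return (F, complexity, accuracy) for one observation.

    q: beliefs about hidden states after seeing the observation
    prior: beliefs before seeing it
    likelihood_of_obs: p(observation | state), one entry per state
    """
    complexity = kl_divergence(q, prior)  # cost of changing one's mind
    accuracy = sum(qi * math.log(li) for qi, li in zip(q, likelihood_of_obs) if qi > 0)
    return complexity - accuracy, complexity, accuracy


prior = [0.5, 0.5]              # illustrative numbers, not from the paper
likelihood_of_obs = [0.9, 0.2]

evidence = sum(p * l for p, l in zip(prior, likelihood_of_obs))
exact_posterior = [p * l / evidence for p, l in zip(prior, likelihood_of_obs)]

f_prior, _, _ = variational_free_energy(prior, prior, likelihood_of_obs)
f_exact, complexity, accuracy = variational_free_energy(exact_posterior, prior, likelihood_of_obs)

print(f"F with unchanged beliefs: {f_prior:.4f}")
print(f"F with exact posterior:   {f_exact:.4f} (negative log evidence: {-math.log(evidence):.4f})")
```

Keeping the prior beliefs costs nothing in complexity but explains the observation poorly. Updating to the posterior pays a complexity cost and is repaid by higher accuracy, giving lower free energy overall.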

In an active inference setting, which is useful for decision-making, the best plan to follow is the one that minimizes expected free energy. This expected free energy is a combination of risk (the difference between predicted outcomes and preferred outcomes) and ambiguity (the expected inaccuracy of predictions). This approach integrates both optimal Bayesian design and Bayesian decision theory, merging information-seeking and preference-seeking behaviors into one objective. Free-energy minimization happens during both active tasks and rest periods, allowing the brain to optimize models for future use. This process includes simplifying models by removing unnecessary details and generating new data through imagination, which helps prepare for future scenarios. Over evolutionary time, minimizing free energy has likely shaped the structure of animal brains, embedding useful prior knowledge in their neural circuits.
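The risk and ambiguity terms can likewise be sketched for a toy decision. The example below assumes two hidden states, two outcomes, and two hypothetical policies named `approach` and `stay`, with invented probabilities throughout. Risk is computed as the KL divergence between predicted and preferred outcomes, and ambiguity as the expected entropy of the outcome likelihood. This is one common discrete formulation and not necessarily the exact form used in the original paper.

```python
import math


def kl_divergence(q, p):
    """KL[q || p] for two discrete distributions given as lists."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def expected_free_energy(state_beliefs, likelihood, preferred_outcomes):
    """Return (G, risk, ambiguity) for one policy.

    state_beliefs: predicted distribution over states under the policy
    likelihood[s][o]: p(outcome o | state s)
    preferred_outcomes: distribution encoding which outcomes the agent prefers
    """
    n_states = len(state_beliefs)
    predicted_outcomes = [
        sum(state_beliefs[s] * likelihood[s][o] for s in range(n_states))
        for o in range(len(preferred_outcomes))
    ]
    risk = kl_divergence(predicted_outcomes, preferred_outcomes)
    ambiguity = sum(qs * entropy(likelihood[s]) for s, qs in enumerate(state_beliefs))
    return risk + ambiguity, risk, ambiguity


# State 0 gives clear outcomes; state 1 gives uninformative ones (illustrative numbers).
likelihood = [[0.9, 0.1], [0.5, 0.5]]
preferred_outcomes = [0.9, 0.1]
policies = {"approach": [0.8, 0.2], "stay": [0.2, 0.8]}

results = {
    name: expected_free_energy(beliefs, likelihood, preferred_outcomes)
    for name, beliefs in policies.items()
}
best_policy = min(results, key=lambda name: results[name][0])
print(best_policy, {name: round(g, 3) for name, (g, _, _) in results.items()})
```

In this setup the selected policy is the one that leads toward preferred outcomes and toward states whose outcomes are informative, which is how a single objective can combine preference-seeking and information-seeking behavior.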

A bright line between generative AI and active inference?

The key difference between generative AI and active inference lies in the concept of agency. The generative models used in active inference give agents the ability to plan and to understand the consequences of their actions. These models have a broader scope, offering causal understanding at multiple levels, from sensory experiences to intuitive theories about physical and social interactions. Living organisms develop a genuine understanding of reality through purposeful interactions with their environment, driven by the need to satisfy metabolic needs and remain viable. This embodied intelligence forms the basis for conceptual and linguistic knowledge, grounding their sense of 'mattering' in their agentive actions.

Active inference agents generate meaningful content by acting and intervening in their world, choosing actions that reduce uncertainty and help achieve goals. This approach contrasts with generative AI, which often lacks the capacity for purposive interaction and relies on predicting patterns from data. Although there are efforts to move generative AI towards more embodied and multimodal settings, these systems still struggle with meaningful sensorimotor experiences and interventions. The development of active inference agents represents a promising path toward genuine artificial understanding, but it requires overcoming significant conceptual and technical challenges to enable real-world operation and interaction.

What way forward?

The future of generative AI holds several promising directions. First, AI models could vary in complexity, depending on the number of parameters and the richness of their training data. Another area of development involves using diverse inputs like text, images, and multimodal data to exploit their unique strengths. Additionally, adding capabilities such as engaging in virtual dialogues or commonsense reasoning can enhance AI's versatility. Crucially, shifting from passive data consumption to active, embodied learning—where AI interacts with the world and learns from these experiences—could revolutionize AI's ability to understand and adapt. This approach would mirror human learning more closely and leverage AI's vast data-processing capabilities to develop a deeper, more flexible understanding of the world.

Current AI efforts focus on increasing model complexity but often overlook the potential of active, intelligent data selection. This missed opportunity could be crucial for developing AI systems with a more profound understanding of concepts like effort, resistance, and cause and effect. By prioritizing interactive learning first and using large datasets as a secondary resource, AI could achieve a level of comprehension closer to or even surpassing human flexibility and abstract thinking. While the role of conscious experience in generating authentic meaning remains uncertain, the integration of temporally deep, self-modeling generative systems might naturally lead to qualitative experiences, enriching AI's ability to predict and interact meaningfully. This interaction-first strategy, although not yet fully explored, holds the potential for creating more advanced and general AI.

Conclusion

From an enactivist perspective, there's a clear distinction between generative AI and generalized AI involving active inference and learning. Generative AI creates content like images, code, or text based on given prompts. In contrast, active inference generates causes of content to aid in action selection, known as 'planning as inference.' This approach requires agency, where agents must learn from sensorimotor experiences and interactions with the world. Thus, generalized AI needs to experience the consequences of its actions to understand the causal structure of the world, unlike generative AI, which learns from static data patterns. This means generative AI might not be suitable for applications needing active learning or artificial curiosity, like autonomous robots or vehicles.

It could be argued that generative AI is one of the most beautiful and important inventions of the century – a 21st-century 'mirror' in which we can see ourselves in a new and revealing light. However, when we look behind the mirror, there is nobody there.

Despite its limitations, generative AI can still significantly impact our understanding of the world. It doesn't just reflect our knowledge but also repackages it, potentially revealing connections we hadn't noticed. This capability can enhance how we externalize and scrutinize our thoughts and ideas, offering a new level of self-engineering. As a mirror of human understanding it is remarkable, but it lacks the depth of true agency and understanding.

Disclaimer: This Future Insight is an adaptation of the original research paper “Generating meaning: active inference and the scope and limits of passive AI”, written by Giovanni Pezzulo, Thomas Parr, Paul Cisek, Andy Clark and Karl Friston and originally published by Cell Press in “Trends in Cognitive Sciences”.

About this paper:

Pezzulo, G., Parr, T., Cisek, P., Clark, A., & Friston, K. (2024). Generating meaning: active inference and the scope and limits of passive AI. Trends in Cognitive Sciences, 28(2), 97-112.